Introduction

As enterprises increasingly adopt AI systems to automate critical business processes, the question of trust becomes paramount. Explainable AI (XAI) represents a fundamental shift from black-box AI systems toward transparent, interpretable solutions that humans can understand and trust. But what is explainable AI, and why has it become essential for responsible enterprise adoption?

Explainable AI refers to artificial intelligence systems designed to provide clear, understandable explanations for their decisions and actions. Unlike traditional AI models that operate as impenetrable “black boxes,” explainable AI enables stakeholders to comprehend how and why specific outcomes were reached. This transparency is critical for enterprise adoption, where decisions must be auditable, compliant, and trustworthy.

This article will break down what explainability means in practical terms, explore why it’s critical to enterprise AI adoption, and demonstrate how Sema4.ai supports transparent AI agent decisions through our comprehensive platform. Understanding explainable AI is essential to implementing responsible and governed AI that delivers business value while maintaining trust and compliance.

What is explainable AI?

Explainable AI is a set of processes and methods that enables human users to comprehend and trust the output of machine learning algorithms. At its core, explainable AI comes down to making AI decision-making transparent, interpretable, and accountable to human stakeholders.

Traditional AI systems, particularly deep learning models, often function as “black boxes” where the path from input to output remains hidden. Explainable AI addresses this limitation by providing mechanisms to understand how AI systems arrive at their conclusions. This includes revealing which data points influenced decisions, what rules or patterns the system identified, and how different inputs might change outcomes.

Post-hoc explanation techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) help achieve this transparency by breaking down complex model decisions into understandable components. LIME fits a simple surrogate model around a single prediction, while SHAP assigns each feature a contribution based on Shapley values from cooperative game theory. Both can highlight which features were most important for a specific prediction or show how changing certain inputs would affect the output.
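To make the idea of feature attribution concrete, here is a minimal sketch that computes exact Shapley values for a toy scoring function. It does not use the SHAP library, which relies on approximations to scale to real models. The `score` function, the feature names, and the baseline values are all assumptions made up for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    Features outside a coalition are set to their baseline value.
    Cost grows as 2^n, so this is only practical for a few features.
    """
    names = list(instance)
    n = len(names)
    values = {}
    for target in names:
        others = [f for f in names if f != target]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without = {
                    f: (instance[f] if f in coalition else baseline[f])
                    for f in names
                }
                with_target = dict(without, **{target: instance[target]})
                # Marginal contribution of the target feature to this coalition.
                total += weight * (predict(with_target) - predict(without))
        values[target] = total
    return values


# Hypothetical scoring function with one interaction term (illustration only).
def score(x):
    return 2.0 * x["income"] - 1.5 * x["debt"] + 0.5 * x["income"] * x["tenure"]


instance = {"income": 3.0, "debt": 2.0, "tenure": 4.0}
baseline = {"income": 1.0, "debt": 1.0, "tenure": 1.0}

attributions = shapley_values(score, instance, baseline)
for feature, value in sorted(attributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>7}: {value:+.3f}")
```

The attributions always sum to the difference between the prediction for the instance and the prediction for the baseline, which is what lets a reviewer read them as a complete breakdown of one decision.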

The relationship between explainability and interpretability is nuanced but important. While interpretability refers to the degree to which humans can understand the cause of a decision, explainability encompasses the broader ability to present the decision-making process in terms that stakeholders can comprehend and act upon. Together, they form the foundation of trustworthy AI systems that enterprises can confidently deploy in mission-critical applications.

Why explainable AI matters

The importance of explainable AI becomes clear when considering the real risks associated with black-box AI systems. Without transparency, organizations face significant challenges including algorithmic bias, compliance violations, stakeholder mistrust, and costly decision errors that can impact business operations and reputation.

AI explainability directly addresses these risks by enabling organizations to identify and correct biased decision-making patterns before they cause harm. When AI systems can explain their reasoning, data scientists and business stakeholders can detect when models rely on inappropriate correlations or discriminatory factors, ensuring fairer and more ethical outcomes.

From a regulatory perspective, responsible AI has become a compliance imperative. Regulations like the EU AI Act, GDPR’s provisions on automated decision-making (often described as a “right to explanation”), and various industry-specific requirements call for transparency in automated decision-making. Organizations using black-box AI systems may find themselves unable to meet these requirements, exposing them to legal and financial risks.

Trust represents another critical factor driving the need for explainable AI. Business users are more likely to adopt and rely on AI systems when they understand how decisions are made. This transparency builds confidence in AI recommendations and enables users to appropriately calibrate their trust based on the system’s reasoning quality.

Furthermore, explainable AI supports better decision-making by enabling human oversight and intervention. When stakeholders understand why an AI system made a particular recommendation, they can better evaluate whether to accept, modify, or override that recommendation based on additional context or business considerations that the AI system might not have access to.

Why is XAI important in enterprise AI?

The significance of explainable AI in enterprise environments extends far beyond basic transparency requirements. In industries like supply chain management, finance, healthcare, and government, AI decisions can have profound impacts on operations, compliance, and stakeholder trust, making explainability essential for successful deployment.

Consider enterprise supply chain AI applications, where AI agents must make complex decisions about inventory management, supplier selection, and logistics optimization. In these scenarios, supply chain AI systems require explainability to help managers understand why certain suppliers were recommended, how inventory levels were calculated, or why specific routing decisions were made. This transparency enables supply chain professionals to validate AI recommendations against their domain expertise and market knowledge. AI agents for supply chain demonstrate how explainable decision-making enhances both automation effectiveness and human oversight.

Responsible AI implementation in enterprises delivers specific benefits that directly impact business outcomes. Auditability becomes possible when AI systems can provide clear explanations for their decisions, enabling organizations to trace decision paths for compliance reviews, regulatory audits, and internal quality assurance processes. This capability is particularly crucial in regulated industries where decision rationale must be documented and defensible.

Model monitoring and maintenance also benefit significantly from explainability. When AI systems can articulate their reasoning, data science teams can more easily identify when models are degrading, encountering edge cases, or making decisions based on outdated patterns. This visibility accelerates model improvement cycles and reduces the risk of AI system failures in production environments.

User adoption represents another critical advantage of explainable enterprise AI. Business users are more likely to trust and effectively utilize AI systems when they understand the reasoning behind recommendations. This understanding enables users to provide better feedback, identify improvement opportunities, and integrate AI insights more effectively into their decision-making processes.

How Sema4.ai incorporates explainability

Sema4.ai has built explainable AI principles directly into our enterprise AI agent platform, ensuring that transparency and trust are foundational elements rather than afterthoughts. Our approach to responsible AI encompasses multiple layers of explainability designed to meet the diverse needs of enterprise stakeholders.

Our Transparent Reasoning feature provides real-time visibility into agent thought processes, showing how AI agents plan, reason, and make decisions throughout their workflows. This transparency enables users to understand not just what an agent decided, but why it reached that conclusion and what factors influenced its reasoning. Business users can observe agent decision-making in real time, building confidence in autonomous operations while maintaining oversight capabilities. Learn more about how agents think and act.

Agent decision logs capture comprehensive records of all agent actions, including the reasoning behind each decision, the data sources consulted, and the rules or patterns that influenced outcomes. These logs provide complete audit trails that support compliance requirements while enabling continuous improvement of agent performance. Organizations can trace any agent decision back to its source data and reasoning process, ensuring accountability and enabling root cause analysis when needed.
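As a rough illustration of what such a log entry could hold, the sketch below models an append-only decision log in Python. It is not the Sema4.ai log format or API. The class names, field names, and sample values are hypothetical, chosen only to mirror the elements described above (action, reasoning, and data sources).

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import List


@dataclass
class DecisionRecord:
    """One audit-trail entry. All field names are illustrative assumptions."""
    agent_id: str
    action: str
    outcome: str
    reasons: List[str]
    data_sources: List[str]
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionLog:
    """Append-only in-memory log; a real system would persist entries."""

    def __init__(self):
        self._records = []

    def record(self, entry):
        self._records.append(entry)
        return len(self._records) - 1  # list index doubles as a record id

    def trace(self, record_id):
        """Return the stored reasoning and sources for one decision."""
        return asdict(self._records[record_id])

    def to_jsonl(self):
        """Serialize the log as one JSON object per line for export."""
        return "\n".join(json.dumps(asdict(r)) for r in self._records)


log = DecisionLog()
rid = log.record(DecisionRecord(
    agent_id="invoice-agent",          # hypothetical agent name
    action="approve_invoice",
    outcome="approved",
    reasons=["vendor matches approved supplier list"],
    data_sources=["supplier_db", "purchase_orders"],  # hypothetical sources
))
entry = log.trace(rid)
print(entry["outcome"], entry["reasons"])
```

Storing the reasons and data sources next to the outcome is what makes root cause analysis possible later: a reviewer can start from any record id and see what the agent consulted.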

The Sema4.ai platform implements role-based access to explanations, ensuring that different stakeholders receive appropriate levels of detail based on their responsibilities and expertise. Business users might see high-level reasoning summaries, while data scientists can access detailed model outputs and technical explanations. This layered approach ensures that explainability serves all stakeholders without overwhelming any particular audience.
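One simple way to picture layered explanations is a per-role filter over a single explanation object. The sketch below is an assumption about how this could work, not a description of the platform's implementation. The role names, field names, and sample values are hypothetical.

```python
# Hypothetical mapping from role to the explanation fields that role may see.
VISIBLE_FIELDS = {
    "business_user": {"outcome", "summary"},
    "data_scientist": {"outcome", "summary", "reasons", "model_outputs"},
}


def explanation_for(role, explanation):
    """Return only the parts of an explanation the given role may see."""
    # Unknown roles fall back to the bare outcome.
    allowed = VISIBLE_FIELDS.get(role, {"outcome"})
    return {key: value for key, value in explanation.items() if key in allowed}


full_explanation = {
    "outcome": "manual_review",
    "summary": "Invoice flagged: vendor is not on the approved list.",
    "reasons": ["vendor not found in approved supplier database"],
    "model_outputs": {"vendor_match_score": 0.12},  # made-up value
}

business_view = explanation_for("business_user", full_explanation)
scientist_view = explanation_for("data_scientist", full_explanation)
print(sorted(business_view))
print(sorted(scientist_view))
```

Keeping one full explanation and filtering it per role means every audience sees a consistent account of the same decision at a different level of detail.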

Our natural language runbooks contribute to explainability by enabling business users to define agent behavior in plain English. This approach creates inherent transparency because the agent’s instructions are expressed in human-readable terms that stakeholders can review, understand, and modify as needed. The alignment between natural language instructions and agent behavior creates a clear connection between business intent and AI execution.

What is an example of explainable AI?

To illustrate explainable AI in practice, consider a concrete example from enterprise finance operations: an AI agent responsible for invoice processing and approval decisions.

In a traditional black-box system, an invoice might simply be approved or rejected with no explanation, leaving finance teams unable to understand the reasoning or validate the decision. This opacity creates risk, reduces trust, and makes it difficult to improve the system over time.

With explainable AI, the same invoice processing agent provides clear reasoning for each decision. For example, when processing a $15,000 software license invoice, the agent might explain: “Invoice approved based on: (1) vendor matches approved supplier list, (2) amount falls within department budget allocation, (3) purchase order PO-2024-1847 exists and matches invoice details, (4) department head pre-approval obtained via email on March 15th, and (5) software category aligns with IT infrastructure budget line item.”

This explanation enables finance professionals to validate the agent’s reasoning, identify any potential issues, and understand exactly why the decision was made. If the invoice had instead been flagged, the agent might explain: “Invoice requires manual review because: (1) vendor not found in approved supplier database, (2) amount exceeds department manager approval threshold of $10,000, and (3) no matching purchase order found in system.”
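The pattern behind both explanations can be sketched as a decision function that returns its reasons together with its verdict. The example below is a simplified, rule-based stand-in, not the actual agent logic. The vendor name, the lookup sets, and the rule that department head pre-approval overrides the threshold are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical reference data; a real agent would query live systems.
APPROVED_VENDORS = {"Acme Software"}
KNOWN_PURCHASE_ORDERS = {"PO-2024-1847"}
MANAGER_THRESHOLD = 10_000


@dataclass
class Invoice:
    vendor: str
    amount: float
    po_number: Optional[str]
    pre_approved: bool = False  # department head sign-off


def review_invoice(inv):
    """Return (decision, reasons) so every verdict carries its rationale."""
    # Each check: (passed?, reason if passed, reason if failed).
    checks = [
        (inv.vendor in APPROVED_VENDORS,
         "vendor matches approved supplier list",
         "vendor not found in approved supplier database"),
        (inv.po_number in KNOWN_PURCHASE_ORDERS,
         f"purchase order {inv.po_number} exists",
         "no matching purchase order found in system"),
        (inv.amount <= MANAGER_THRESHOLD or inv.pre_approved,
         "amount within approval threshold or pre-approved by department head",
         f"amount exceeds department manager approval threshold "
         f"of ${MANAGER_THRESHOLD:,}"),
    ]
    failures = [fail for ok, _, fail in checks if not ok]
    if failures:
        return "manual_review", failures
    return "approved", [passed for _, passed, _ in checks]


approved = review_invoice(
    Invoice("Acme Software", 15_000, "PO-2024-1847", pre_approved=True)
)
flagged = review_invoice(Invoice("Unknown Vendor", 15_000, None))
for decision, reasons in (approved, flagged):
    print(decision)
    for number, reason in enumerate(reasons, start=1):
        print(f"  ({number}) {reason}")
```

Because the reasons come from the same checks that produce the decision, the explanation cannot drift away from what the function actually did.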

Our invoice reconciliation solutions demonstrate this explainable approach in action, where AI agents not only process invoices but provide detailed reasoning that finance teams can review, validate, and use to improve processes over time. This transparency transforms AI from a mysterious automation tool into a collaborative partner that enhances human decision-making while maintaining accountability and trust.

Generative AI vs. explainable AI

Understanding the relationship between generative AI and explainable AI is crucial for enterprise leaders evaluating AI solutions. While these concepts address different aspects of AI functionality, they often intersect in enterprise applications and can complement each other effectively.

Generative AI focuses on creating new content, whether text, images, code, or other outputs, based on patterns learned from training data. Large language models like GPT-4 or Claude exemplify generative AI by producing human-like text responses to prompts. The primary goal is content creation and generation rather than decision explanation.

Explainable AI, in contrast, emphasizes transparency and interpretability in AI decision-making processes. AI explainability is concerned with helping humans understand why an AI system made a particular choice, what factors influenced that decision, and how different inputs might change the outcome. The focus is on transparency and trust rather than content generation.

However, these approaches often coexist in enterprise AI systems. For example, a generative AI system might create a business report while simultaneously providing explanations for why certain data points were emphasized, which sources were prioritized, or how conclusions were reached. This combination delivers both the creative capabilities of generative AI and the transparency benefits of explainable AI.

In Sema4.ai’s platform, we leverage both approaches strategically. Our AI agents use generative capabilities to create natural language explanations of their reasoning processes, while our explainable AI features ensure that these explanations are accurate, relevant, and trustworthy. This integration enables agents to communicate their decision-making processes in accessible language while maintaining the transparency and accountability that enterprises require.

The key distinction lies in primary purpose: generative AI excels at creating outputs, while explainable AI excels at explaining decisions. Enterprise success often requires both capabilities working together to deliver solutions that are both powerful and trustworthy.

Is explainable AI still relevant?

Despite the rapid evolution of AI technology, explainable AI remains more relevant than ever for enterprise adoption. Some misconceptions suggest that XAI is outdated or unnecessary given advances in AI capabilities, but the reality is that AI explainability becomes increasingly critical as AI systems grow more sophisticated and pervasive in business operations.

The complexity of modern AI systems actually amplifies the need for explainability rather than reducing it. As AI agents handle more complex tasks, make more consequential decisions, and operate with greater autonomy, the ability to understand and validate their reasoning becomes essential for maintaining control and trust. Organizations cannot afford to deploy AI systems that make important business decisions without providing clear explanations for their actions.

Regulatory trends reinforce the continued importance of responsible AI and explainability. New regulations worldwide are strengthening requirements for AI transparency, algorithmic accountability, and automated decision-making oversight. Organizations that ignore explainability requirements may find themselves unable to comply with evolving regulatory frameworks, creating legal and operational risks.

Furthermore, as AI adoption matures beyond early experimentation phases, enterprises are discovering that explainability is essential for scaling AI initiatives successfully. Initial AI pilots might succeed without extensive explainability, but enterprise-wide deployment requires the trust, accountability, and governance that only transparent AI systems can provide.

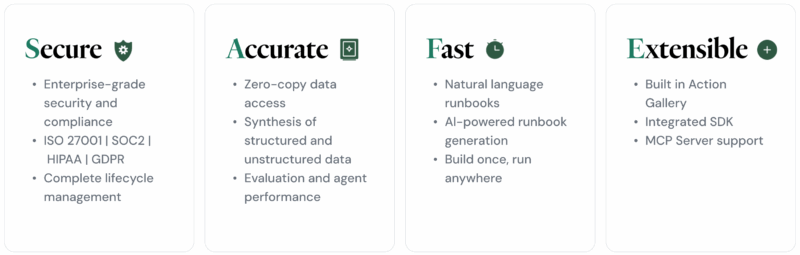

Sema4.ai’s commitment to explainable AI and to delivering SAFE agents (Secure & governed, Accurate and explainable, Fast and easy, Extensible and adaptable) reflects our understanding that transparency is not a temporary requirement but a fundamental characteristic of trustworthy enterprise AI. Our platform’s explainability features are designed to evolve with advancing AI capabilities, ensuring that transparency and trust remain central to our solutions regardless of underlying technological changes.

Building trust through transparent AI

The future of enterprise AI depends on building and maintaining trust through transparency, accountability, and explainability. Explainable AI represents more than a technical capability—it’s a fundamental requirement for responsible AI adoption that delivers business value while maintaining stakeholder confidence.

Organizations that prioritize explainable AI position themselves for sustainable AI success. By choosing transparent AI solutions, enterprises can navigate regulatory requirements, build user trust, enable effective governance, and create AI systems that enhance rather than replace human judgment. The investment in explainability pays dividends through reduced risk, improved adoption, and more effective AI-human collaboration.

Sema4.ai’s Enterprise AI Agent Platform demonstrates how explainability can be seamlessly integrated into powerful AI solutions without sacrificing performance or capabilities. Our transparent reasoning, comprehensive audit trails, and natural language explanations ensure that enterprises can deploy AI agents with confidence, knowing that every decision can be understood, validated, and improved over time.

Get started with enterprise AI agents that prioritize transparency and trust. Explore our AI Agent Learning Center to discover how Sema4.ai empowers enterprises with explainable, governed AI agents that deliver business value while maintaining the accountability and oversight that modern organizations require.