Cognitive architectures explained — what’s inside an enterprise AI agent

Introduction to enterprise AI agents: What is an enterprise AI agent?

Agents are the most exciting new paradigm in enterprise software because they exhibit capabilities we haven’t seen before in software. Artificial intelligence applications come in many different shapes and sizes, and not every AI application is considered an agent. Here, we will unpack what exactly makes an AI application agentic.

To understand AI agents, we first need to know how LLMs work, their characteristics, and how AI engineering is used to get us from predicting the next word in a sequence to software that can reason, collaborate, and act.

Understanding these concepts is important even for non-developers because it helps us apply agents to solve real-world problems and removes some misconceptions about the technology behind them.

A fully featured agent is a complex and intricate application that leverages large language models in interesting ways, but it all starts with a few basic concepts – planning, reflection, and tools. But first, let’s start by dissecting how an LLM really works.

Building blocks of an AI agent (credit, Harrison Chase)

From tokens to thinking, with LLMs

If you look under the hood, an LLM is a program of no more than a few hundred lines of code plus a massive file of model weights that are learned through training. The only task an LLM has been trained to do is to predict the next token in the sequence of tokens (the context) it has been given. Tokens are the units of text that LLMs process. You can think of them as words, even though they are not exactly the same.

When you use an LLM through prompting, you give the model some text, and the model returns the next token. You then append that token to the text and repeat until the answer is complete. For example, if you start with “Mary had a …” the model will give you back “… little lamb” or some variation of this, depending on which model you are using (I tried this with Llama3-8B).
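The predict-append-repeat loop described above can be sketched in a few lines. This is only an illustration: `TOY_MODEL`, `predict_next_token`, and `generate` are hypothetical names, and the lookup table stands in for a real model, which would score every token in its vocabulary using its learned weights.

```python
# Toy stand-in for an LLM: maps the last token to a "most likely" next token.
# This is an assumption for illustration, not how a real model stores knowledge.
TOY_MODEL = {
    "Mary": "had",
    "had": "a",
    "a": "little",
    "little": "lamb",
    "lamb": "<end>",
}


def predict_next_token(context: list[str]) -> str:
    """Return the next token for the given context (hypothetical helper)."""
    if not context:
        return "<end>"
    return TOY_MODEL.get(context[-1], "<end>")


def generate(prompt: str, max_tokens: int = 10) -> str:
    """Call the model repeatedly, appending each predicted token to the context."""
    context = prompt.split()
    for _ in range(max_tokens):
        token = predict_next_token(context)
        if token == "<end>":
            break
        context.append(token)
    return " ".join(context)


print(generate("Mary had a"))  # Mary had a little lamb
```

The important part is the loop in `generate`: the model only ever produces one token at a time, and the surrounding program decides when to stop.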

This is impressive on its own, but what really makes these token-predicting machines exciting is that through their training on vast amounts of internet texts, LLMs have encoded a world model into their weights. This allows LLMs to reason about their context in a limited way.

LLM reasoning is limited because the model uses a fixed amount of computation to generate each token through a complex process of statistical prediction. Give it a complex problem to solve, like writing a blog article based on your specifications, and it will spend precisely the same amount of computation on each token of the answer as it took to figure out that Mary had, indeed, a little lamb.

This is far from how humans work. We think about complex problems for a long time, break them into more manageable sub-components, and plan how to solve them. This is where AI engineering, planning, reflection, and agents come in.

Tools of the trade for AI

Before we go any further into breaking down agents, there’s one more important concept to unpack, namely tools and how they are used for AI actions.

As mentioned earlier, LLMs simply predict tokens in a sequence. An LLM alone cannot search the web, execute Python code, or fetch information from a CRM system. It is limited to the context we give it in our prompt and static model weights that have been learned during training.

In order to give hands to AI and help it reach beyond its intrinsic limits, we can introduce external capabilities that the LLM can choose to use to achieve its task. This concept is similar to how humans use tools to augment our capabilities.

If someone asks you to solve a simple problem, “What is 1 + 123^4?” you immediately know what steps you need to take. First, raise 123 to the power of four and then add one to the result. But 123^4 isn’t something we do in our head. Instead, you reach for a calculator, a tool that helps you solve the calculation and get to 228 886 642.

This is exactly how AI uses tools, and the list of available tools is provided to the LLM as part of its prompt sequence. However, it is important to note that when using a tool, the LLM itself does not call the tool in question. It simply generates a text response that describes what tool should be called and what input data should go into that call. It is up to the program that prompts the LLM to ultimately call that tool correctly.
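A minimal sketch of this division of labor, using the calculator example from above. The JSON shape of the tool call, and the names `fake_llm`, `TOOLS`, `calculator`, and `run_tool_call`, are assumptions made for illustration. Real LLM APIs each define their own tool-call format.

```python
import json


def calculator(base: int, exponent: int, add: int) -> int:
    """A tool: plain code that the host program can run."""
    return base ** exponent + add


# Registry of tools the host program is willing to execute (hypothetical).
TOOLS = {"calculator": calculator}


def fake_llm(prompt: str) -> str:
    """Stand-in for a model. It returns only text that describes a tool call."""
    return json.dumps(
        {"tool": "calculator", "arguments": {"base": 123, "exponent": 4, "add": 1}}
    )


def run_tool_call(llm_output: str) -> int:
    """The host program, not the LLM, parses the text and calls the tool."""
    call = json.loads(llm_output)
    tool = TOOLS[call["tool"]]
    return tool(**call["arguments"])


result = run_tool_call(fake_llm("What is 1 + 123^4?"))
print(result)  # 228886642
```

In a full agent, the tool result would typically be added to the context and sent back to the LLM so it can formulate the final answer.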

The terms tool and action are often used interchangeably, and the ability to use them is often referred to as function calling. When the two are distinguished, the difference is usually this:

Tool – Open-ended capability: browser search, calculator

Action – Pre-determined workflow: add a new user to CRM, create a new purchase order

The ability to correctly identify when to use tools and give accurate instructions to do so depends on the characteristics of the LLM. Some LLMs have been specifically fine-tuned to use tools by showing example prompts and responses with tool-calling instructions. LLMs that can perform this reliably are in high demand as agents are becoming more widely used.

Cognitive architectures for AI agents – plan, execute, reflect

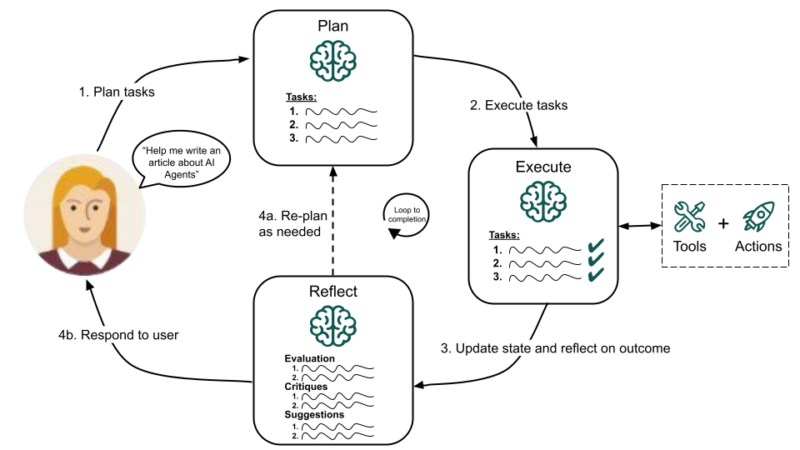

Now that we understand how LLMs work and how tools and actions are connected to LLMs, we can start putting together the building blocks of an AI agent. The structure and design pattern of an LLM program is commonly called a cognitive architecture, and there are dozens of different architectures and variations of them that fit specific problems.

What makes an architecture agentic is the use of techniques like planning and reflection that introduce feedback mechanisms into the LLM reasoning loop. We can’t make an LLM think deeper and harder with one prompt, but we can call it multiple times and leverage the limited reasoning capability of an LLM to break down tasks and evaluate (reflect) its results against the given task. We can also work around the LLM’s limits with tools and memory of previous interactions.
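The reflection feedback loop can be sketched as follows. Both `draft` and `critique` are hypothetical stand-ins for separate LLM calls with different prompts. Their hard-coded behavior is an assumption chosen so the example runs without a model.

```python
from typing import Optional


def draft(task: str, feedback: Optional[str]) -> str:
    """Stand-in for an LLM call that writes or revises a draft."""
    if feedback is None:
        return f"Draft about {task}"
    return f"Draft about {task} (revised: {feedback})"


def critique(task: str, text: str) -> Optional[str]:
    """Stand-in for an LLM call that evaluates the draft against the task.

    Returns feedback text, or None when the draft is judged good enough.
    """
    if "revised" not in text:
        return "add a concrete example"
    return None


def reflect_loop(task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Alternate drafting and critiquing until the critic is satisfied."""
    feedback: Optional[str] = None
    text = ""
    for round_number in range(1, max_rounds + 1):
        text = draft(task, feedback)
        feedback = critique(task, text)
        if feedback is None:
            return text, round_number
    return text, max_rounds


final_text, rounds = reflect_loop("AI agents")
print(rounds)      # 2
print(final_text)  # Draft about AI agents (revised: add a concrete example)
```

The `max_rounds` cap is a design choice: without it, a critic that is never satisfied would keep the loop running indefinitely.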

Let’s take, for example, the task of writing a blog article. A human will first break down this task into parts like defining the audience, researching the topic, creating an outline, writing a first draft, reviewing the draft, and finally modifying it until satisfied with the result. If you ask ChatGPT to write a blog for you, it is the human equivalent of writing an article non-stop from start to finish, all in one go, and then calling it done.

The agentic way of writing a blog article follows precisely the same patterns as the human way of writing it. During this workflow, the agent application will call an LLM dozens of times with different prompts. One way to implement this would be a plan-execute cognitive architecture.

Plan-execute cognitive architecture (credit, LangChain)

If we implement a blog-writing agent with this architecture, its execution and prompting flow would look something like this at a very high level:

Objective: Write an article about AI agents

Agent reasoning and execution:

- Formulate task plan:
  - Research AI agents
  - Create an article outline
  - Write subsections
  - Create illustrations
- Execute task 1: Research AI agents
  - Tool use: Wikipedia search on top 10 relevant articles
  - Tool use: Google search for recent AI agent stories
  - Rank top 5 sources against objective and store summaries into source documents
- Re-plan:
  - Based on the results of task 1, we can proceed to task 2. There is no need to update the task list.
- Execute task 2: Create an article outline
  - Based on source document summaries, find three main topics for the article and write the outline of the article
- Re-plan:
  - The article outline reflects the given objective. Move to the next task.
- Execute task 3: Write the first draft of the first section.
  - …
- …
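The control flow of the trace above can be sketched as a simple loop. The functions `plan`, `execute`, and `replan` are hypothetical stand-ins: in a real agent each would prompt an LLM (and `execute` would also call tools), while here they return fixed values so the sketch runs on its own.

```python
def plan(objective: str) -> list[str]:
    """Stand-in planner. A real agent would ask an LLM for this task list."""
    return [
        "Research AI agents",
        "Create an article outline",
        "Write subsections",
        "Create illustrations",
    ]


def execute(task: str, notes: list[str]) -> str:
    """Stand-in executor. A real agent would call tools and an LLM here."""
    return f"done: {task}"


def replan(objective: str, remaining: list[str], notes: list[str]) -> list[str]:
    """Stand-in re-planner. This one always keeps the remaining tasks unchanged."""
    return remaining


def run_agent(objective: str) -> list[str]:
    """Plan once, then alternate between executing a task and re-planning."""
    remaining = plan(objective)
    notes: list[str] = []
    while remaining:
        task, remaining = remaining[0], remaining[1:]
        notes.append(execute(task, notes))
        remaining = replan(objective, remaining, notes)
    return notes


for note in run_agent("Write an article about AI agents"):
    print(note)
```

Because `replan` sees both the remaining tasks and the results so far, it is the place where an agent could add, drop, or reorder tasks when a step turns up something unexpected.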

Based on this example, it is easy to see why an agent can fluently perform tasks where a simple chatbot will fail miserably. The agent has the benefit of multiple LLM calls and an externally enforced framework for planning, reasoning, reflecting, and evaluating its progress against the objective.

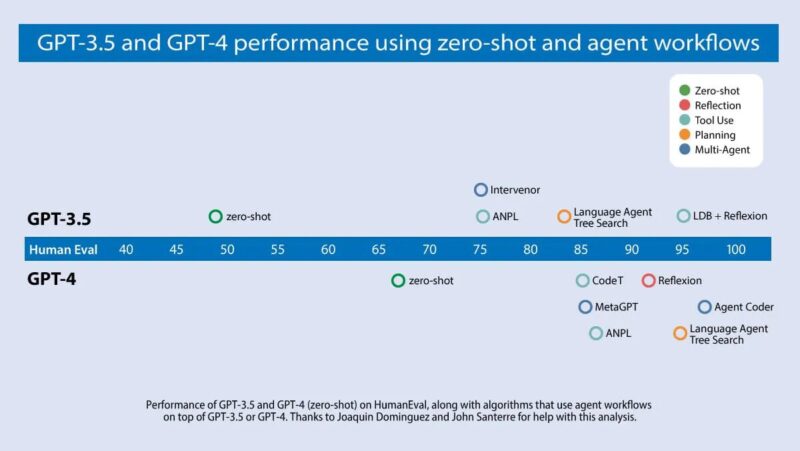

The figure below illustrates this. In a coding benchmark study, the GPT-3.5 model was shown to roughly double its performance, from 48% to 95%, with a cognitive architecture that introduces tools and agentic reflection over simple direct prompting. In tasks like this, building the right cognitive architecture can outweigh raw model horsepower.

Real-world task performance with agents (credit, Andrew Ng, DeepLearning.AI)

What’s next for intelligent AI agents

Agents are driving forward a wave of innovation in AI applications. New cognitive architectures are being researched and developed, while the demands of agents are influencing the next generation of foundation model development. Function calling, response latency, and the ability to follow instructions have become increasingly important model characteristics.

The LLM’s superpower is its flexibility to react and adapt to new situations and input in a way that software has never been able to do before. However, the LLM also has inherent limits on how complex its reasoning can be. Agents are a way to design around these limitations and build LLM-powered applications that perform human-like tasks with speed and accuracy.

In addition to LLM improvements, breakthroughs in agents are enabled by innovation in areas such as human-agent collaboration, agent planning, enterprise data context for agents, and agent security.