Last week’s launch of OpenAI Frontier marked something bigger than a product announcement. It was an architectural declaration, one that signals where the entire enterprise AI industry is heading. And for those of us who have been building in this space, it felt less like news and more like confirmation.

Three ideas at the heart of the announcement deserve attention, because they define the blueprint for how AI agents will actually work inside enterprises:

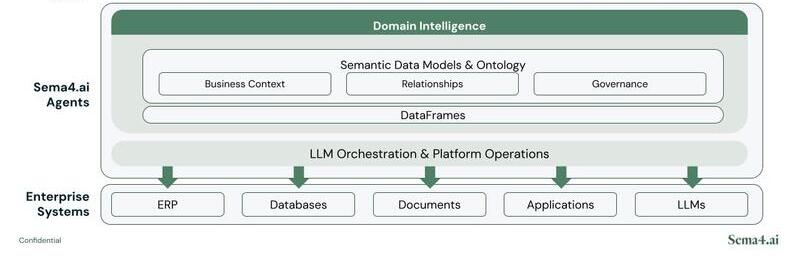

1. Complex domain-specific work needs a new orchestration layer, one fundamentally powered by LLMs.

This isn’t about bolting a chatbot onto a workflow engine. The orchestration layer itself must be intelligent: capable of planning, adapting, and executing across systems in ways that traditional automation cannot. When agents can reason over data, work with files, run code, and use tools within an open execution environment, you’re looking at a fundamentally different kind of enterprise infrastructure.

2. A new semantic layer must harmonize knowledge, data, and existing applications.

This is the idea that resonated most deeply with us. The announcement described it as “a semantic layer for the enterprise that all AI coworkers can reference to operate and communicate effectively.” That’s not just product positioning; it’s an architectural truth. AI agents fail when they lack context. Data is scattered across systems, permissions are complex, and every integration becomes a one-off project. The semantic layer is what makes agents actually work, giving them a unified understanding of how information flows, where decisions happen, and what outcomes matter.

3. The agent lifecycle demands a comprehensive control tower, from onboarding to continuous improvement.

The best framing we’ve seen models the agent lifecycle after how enterprises scale people: onboarding, institutional knowledge transfer, learning through experience, performance feedback, and governed access. Build, run, manage — it’s the full cycle, and it needs purpose-built infrastructure.

Our view from the trenches

At Sema4.ai, we’ve been living inside this architecture, specifically the semantic layer at its center. It’s literally in our name. We build the infrastructure that translates domain knowledge about business documents, data sources, and line-of-business applications into a form AI agents can work with, accurately and with full fidelity.

What we’re seeing with our customers confirms the pattern. What started as standard operating procedure (SOP) runbooks has evolved into a rich mix of skills, workflows, and tools, used on their own and in combination. This is powering a wide variety of data-centric agentic use cases across both line-of-business workflows and business analyst functions. The semantic layer is the key enabler: it’s what allows agents to understand not just what data exists, but what it means in context.

The AI-augmented system of record

Just as CRM became the system of record for customer relationships and ERP for financial operations, the semantic layer is becoming the AI-augmented system of record for how AI understands and operates within an enterprise. It’s the connective tissue that separates isolated AI experiments from agents that do real work.

The industry is converging on this architecture. We’ve been building it. And we’re just getting started.